Last Updated on: 25th May 2024, 09:22 pm

Key takeaways from this article:

- Contextual SDA passed both quality tests we performed and described in this article

- We assessed the quality of contextual SDA signals with two mechanisms: SSPs’ audience-based Deal IDs and RTB House’s proprietary ContextAI engine

Foreword

Here’s a quick recap of what we need to take into account before reading this article:

- Seller-Defined Audiences is gaining momentum (the observed scale almost doubled in the 4 months to February 2023), but mostly on the sell side, and the signals we received vary widely in their sources, methodologies, taxonomies, and segments.

- It can’t be taken for granted that the signals being sent comply with SDA specifications and are of good quality.

- There are 2 types of SDA signals: one describing the content of a given website (AKA contextual SDA) and the other describing audiences and their behavior across the publisher’s properties (AKA user SDA).

Given all of the above, we felt it was necessary to test the quality of SDA signals. As the two types differ in nature, they have to be tested differently; this article focuses on contextual SDA. We used 2 methods to test the reliability of contextual SDA signals: audience-based Deal IDs and our proprietary ContextAI engine.

Table of Contents:

- Test 1: Deal IDs vs. contextual Seller-Defined Audiences

- Test 2: Context AI vs. contextual Seller-Defined Audiences

- What’s the verdict?

Test 1: Deal IDs vs. contextual Seller-Defined Audiences

Assumption: Commonly used audience-based Deal IDs are a reliable tool for contextual targeting

Hypothesis: Contextual SDA is a reliable tool for contextual targeting

Selected segment for the test: Pets (ID 422 from IAB Tech Lab Content Taxonomy 2.0-2.2)

Approach:

- Reaching out to the top SDA providers and/or SSPs that were active in the selected contextual SDA category and asking them to create a Deal ID based only on contextual data for ID 422 from IAB Tech Lab Content Taxonomy 2.0-2.2 (“Pets”) and send it to RTB House

- Identifying bid requests that carried both a contextual SDA signal and the requested Deal ID

- Calculating the match rate between contextual SDA signals and Deal IDs for the ID 422 segment

- Identifying contextual SDAs other than “Pets” that arrived alongside our requested Deal IDs, in order to manually investigate which of the two solutions returned better results

Measure of success: Match rate

Exceptions: Match rates might be lower wherever our core assumption was wrong, i.e., where contextual SDA was actually of higher quality than the Deal ID. The fourth step of the approach above was added to account for this possibility, as it allowed us to perform additional deep dives as needed.
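To make the approach above more concrete, here is a minimal Python sketch of how the overlap, match rate, and co-occurring segments could be computed. It is not our production pipeline: it assumes a stripped-down bid request loosely modeled on OpenRTB 2.x, with contextual SDA segment IDs under `site.content.data[].segment[]` and deals under `imp[].pmp.deals[]`. The function names and the `"pets-deal"`/`"seg-A"` identifiers are hypothetical, and only the exact ID 422 counts as a match (any Tier 2 children of “Pets” are ignored, which is also an assumption).

```python
from collections import Counter

PETS_ID = "422"  # "Pets" in IAB Tech Lab Content Taxonomy 2.0-2.2


def contextual_sda_ids(bid_request: dict) -> set:
    """Segment IDs from contextual SDA data in site.content.data[].segment[]."""
    data = bid_request.get("site", {}).get("content", {}).get("data", [])
    return {seg["id"] for d in data for seg in d.get("segment", [])}


def deal_ids(bid_request: dict) -> set:
    """Deal IDs offered in imp[].pmp.deals[]."""
    return {
        deal["id"]
        for imp in bid_request.get("imp", [])
        for deal in imp.get("pmp", {}).get("deals", [])
    }


def deal_vs_sda(bid_requests, requested_deal_id, expected_id=PETS_ID, top_n=3):
    """Return (overlap size, match rate, most common other SDA segment IDs)."""
    # Keep only requests carrying both a contextual SDA signal and the deal
    overlap = [
        r for r in bid_requests
        if requested_deal_id in deal_ids(r) and contextual_sda_ids(r)
    ]
    if not overlap:
        return 0, None, []
    # Share of overlapping requests whose SDA signal includes the expected ID
    matches = sum(expected_id in contextual_sda_ids(r) for r in overlap)
    # Other SDA segments arriving alongside the deal, queued for manual review
    others = Counter(
        seg for r in overlap for seg in contextual_sda_ids(r) if seg != expected_id
    )
    return len(overlap), matches / len(overlap), others.most_common(top_n)


def make_request(deal, segments):
    """Build a hypothetical, stripped-down bid request for the example."""
    return {
        "imp": [{"pmp": {"deals": [{"id": deal}]}}],
        "site": {"content": {"data": [{"segment": [{"id": s} for s in segments]}]}},
    }


requests = [
    make_request("pets-deal", ["422"]),
    make_request("pets-deal", ["seg-A"]),
    make_request("other-deal", ["422"]),  # different deal: not in the overlap
]
print(deal_vs_sda(requests, "pets-deal"))  # (2, 0.5, [('seg-A', 1)])
```

Returning the co-occurring segments together with the rate keeps the fourth step (manual deep dives) tied to exactly the same set of overlapping bid requests.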

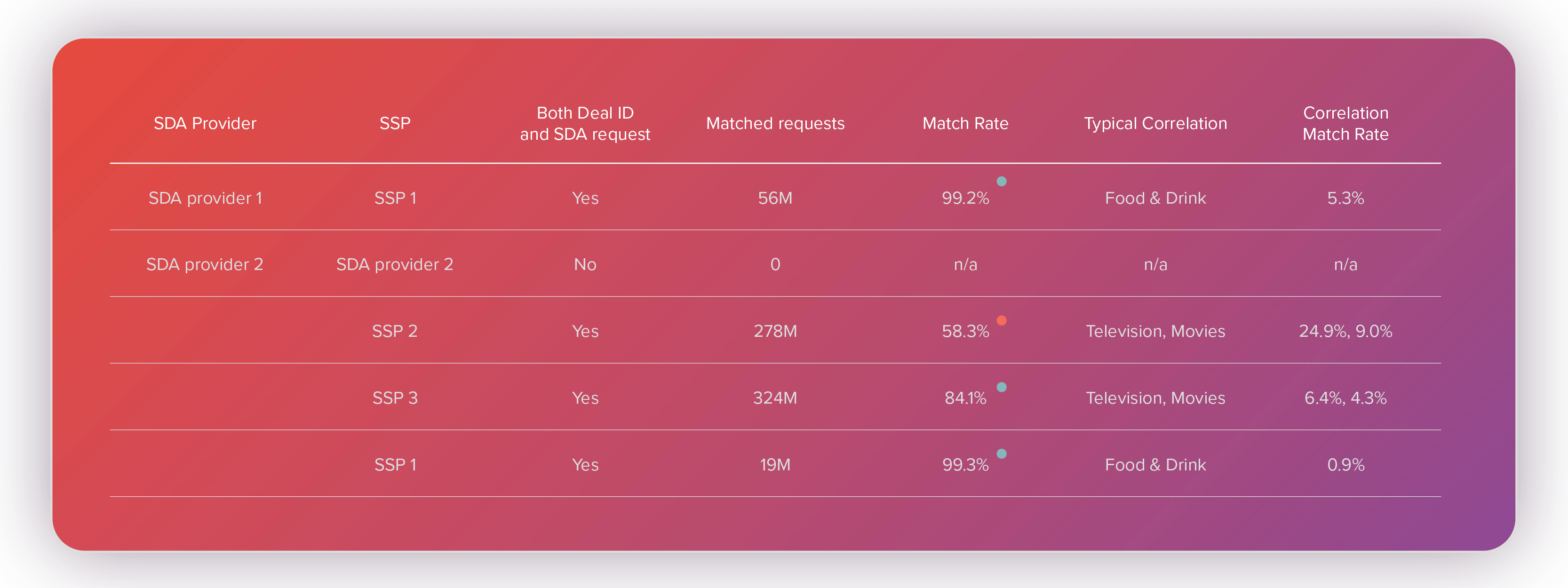

Test: It turned out that for our selected segment, “Pets”, there were 2 major SDA providers. We reached out to them and received 2 unique pet-oriented Deal IDs based on contextual data. For one of them, we found an overlap of 56M bid requests that also carried contextual SDA signals. For the other, not a single bid request contained both an SDA signal and the Deal ID, so we reached out to the most active SSPs instead, hoping for a significant overlap. This approach proved fruitful, and the results were as follows:

“Typical Correlation” marks the most popular contextual SDA categories, other than the expected “Pets”, that arrived alongside our requested Deal IDs. Green dots mark that the success criterion was met. The red dot marks a correlation that exists but isn’t strong enough. We investigated only compliant¹ SDA signals collected through 4Q 2022.

All match rates were at a satisfactory level, except for SSP 2’s Deal ID. We followed up to understand which URLs drove the 25% “Television” mismatch against the expected “Pets”. It turned out that while the domain as a whole (https://horseyhooves.com/) targets horse enthusiasts, the specific URLs were about a TV series (Yellowstone) that features horses. In this case, therefore, Seller-Defined Audiences performed better than the Deal ID. If we counted all TV/movie mismatches as matches (we manually checked the most popular URLs, and they fit this rationale), the match rate would be around 83%.

In fact, by the same reasoning, the Deal IDs from SSP 1 and SSP 3 would also show better match rates. For SSP 1, however, the mismatch involved the “Food & Drink” SDA label (rather than “Television”) in bid requests that turned out to come from sites about dog and cat food.
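The manual reclassification above comes down to simple arithmetic: a strict match rate, and a relaxed one in which mismatched URLs that a reviewer judged on-topic count as matches. The sketch below uses hypothetical example.com URLs and made-up counts, not our data, and the `match_rates` helper is an illustrative name.

```python
def match_rates(records, expected_label, on_topic_urls):
    """Strict and relaxed match rates for bid requests carrying one Deal ID.

    records: (url, set_of_sda_labels) per bid request.
    on_topic_urls: mismatched URLs a reviewer judged to fit the deal's topic
    (hypothetical input; in practice this comes from manual review).
    """
    if not records:
        raise ValueError("no records")
    strict = sum(expected_label in labels for _, labels in records)
    relaxed = sum(
        expected_label in labels or url in on_topic_urls for url, labels in records
    )
    return strict / len(records), relaxed / len(records)


records = [
    ("https://example.com/horse-care", {"Pets"}),
    ("https://example.com/saddles", {"Pets"}),
    ("https://example.com/tv-series-recap", {"Television"}),  # horse-themed show
    ("https://example.com/stock-tips", {"Business and Finance"}),
]
print(match_rates(records, "Pets", {"https://example.com/tv-series-recap"}))
# (0.5, 0.75)
```

Keeping both numbers side by side makes it explicit how much of the improvement depends on the manual review rather than on the signals themselves.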

Test 2: Context AI vs. contextual Seller-Defined Audiences

Assumption: RTB House’s proprietary Context AI is a reliable tool for contextual targeting

Hypothesis: Contextual SDA is a reliable tool for contextual targeting

Selected segment for the test: The 10 most widely deployed SDA segments across all collected contextual SDA signals

Approach:

- Mapping SDA segments to ContextAI labels

- Identifying match rates between the 10 most common categories of contextual SDA signals and ContextAI labels

Measure of success: Match rate

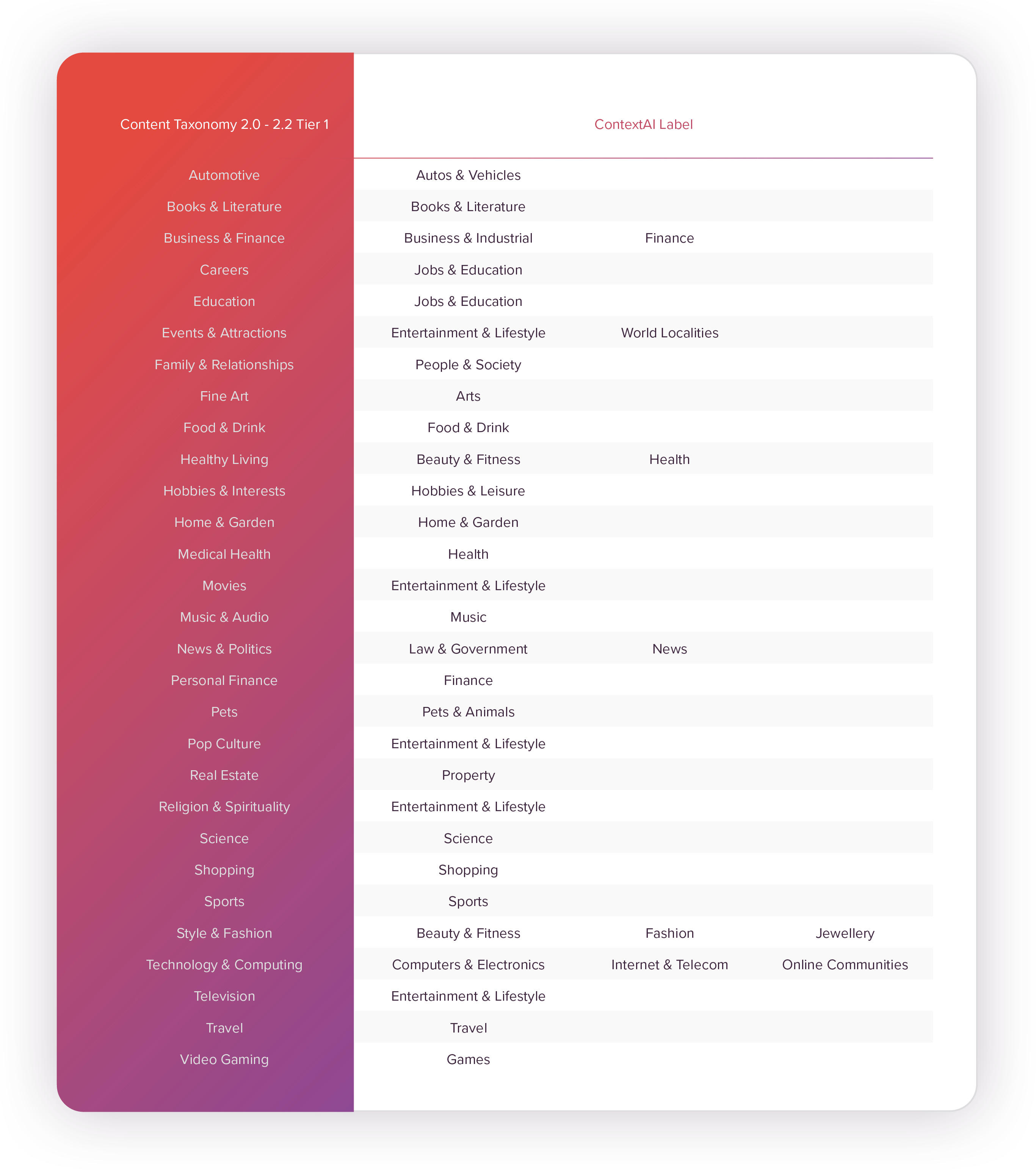

Test: As the labels used by ContextAI differ from SDA segments, the two had to be mapped to one another. If an SDA signal (on the left-hand side) is accompanied by any of the ContextAI labels mapped to it (on the right-hand side), it counts as a “match”; otherwise, it is a “mismatch”. Figure 2 shows the mapping table defining the correct matches for IAB Tech Lab Content Taxonomies 2.0-2.2, which were by far the most popular taxonomies used by SDA providers². We chose Tier 1 segments for our test, as their granularity is similar to that of our ContextAI labels. To complete the overview with the newer (but less popular) IAB Tech Lab Content Taxonomy 3.0, we mapped it into the 2.0-2.2 segmentation. For the most part, the two were identical at Tier 1, with just a few exceptions that required us to go down to Tier 2.

Figure 2: The “Sensitive Topics” segment from the Content Taxonomy was excluded from the analysis because no ContextAI labels could be mapped to it
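The matching rule can be expressed as a small lookup. In this sketch the mapping entries and ContextAI label names are hypothetical placeholders (the real table is the one in Figure 2), and a category with no mapped label, like “Sensitive Topics”, is excluded rather than counted as a mismatch.

```python
from typing import Optional

# Hypothetical excerpt of a Tier 1 mapping; the real mapping is in Figure 2.
SDA_TO_CONTEXTAI = {
    "Pets": {"Pets & Animals"},
    "Sports": {"Sports"},
    "Travel": {"Travel", "Hotels & Accommodation"},
}


def classify(sda_tier1: str, contextai_labels: set) -> Optional[str]:
    """'match' if any ContextAI label mapped to the SDA category is present,
    'mismatch' otherwise, None if the category has no mapping (excluded)."""
    mapped = SDA_TO_CONTEXTAI.get(sda_tier1)
    if mapped is None:
        return None  # e.g. "Sensitive Topics": nothing to map it against
    return "match" if mapped & contextai_labels else "mismatch"


print(classify("Travel", {"Hotels & Accommodation", "News"}))  # match
print(classify("Pets", {"News"}))                              # mismatch
print(classify("Sensitive Topics", {"News"}))                  # None
```

Returning `None` instead of `"mismatch"` keeps unmappable categories from dragging down the match rate.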

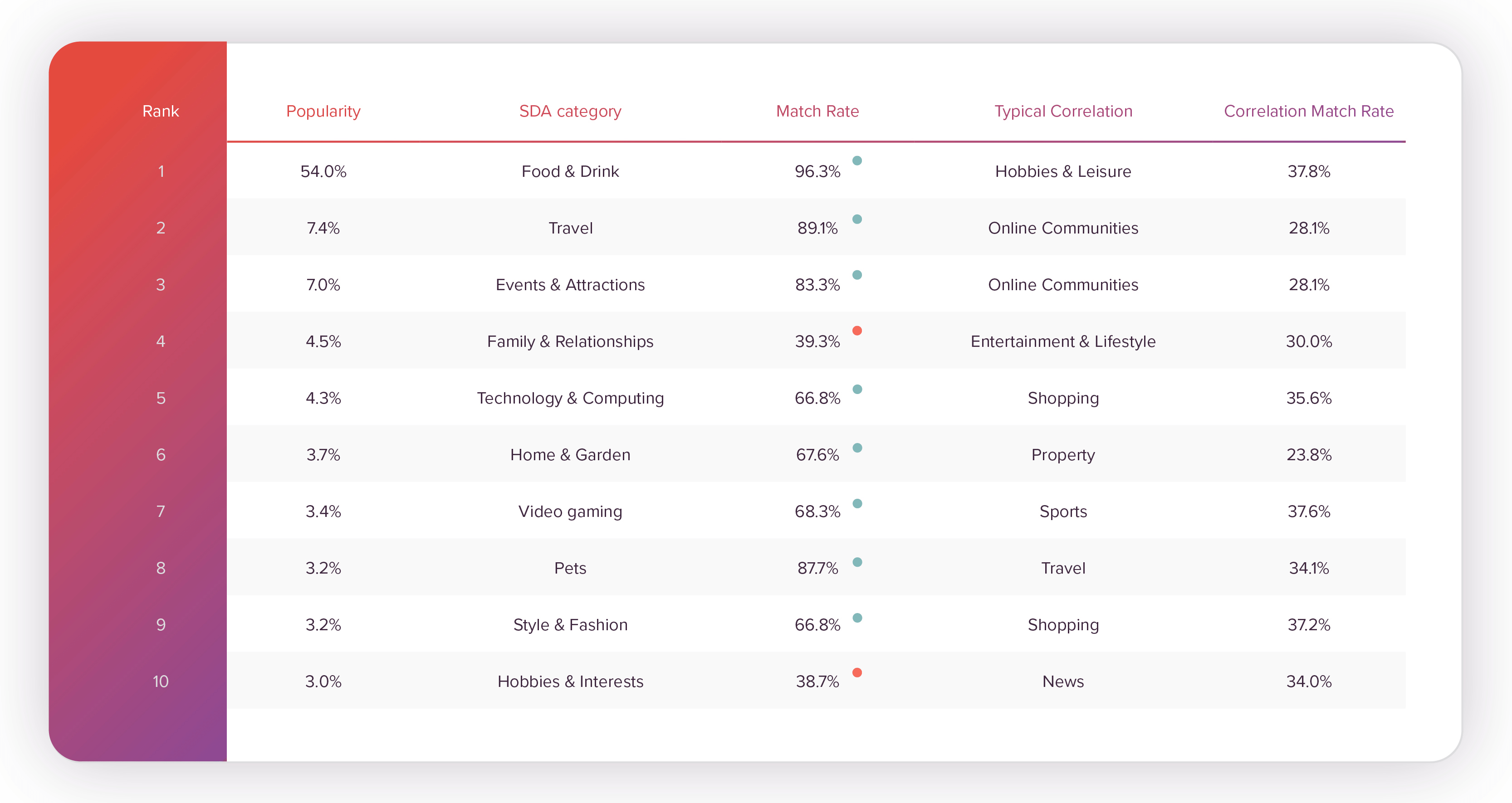

After applying this mapping, we obtained the following match rates.

Figure 3


“Popularity” indicates the % of the received bid requests that had a compliant contextual SDA signal with a given category from IAB Tech Lab Content Taxonomy.

“Match Rate” marks the % of the bid requests with a certain contextual SDA category that had ContextAI labels mapped to it in accordance with Figure 2 (green if >60%, otherwise red).

“Typical Correlation” marks the other (outside of the agreed mapping) most popular Context AI labels.

We investigated only compliant SDA signals collected through 4Q 2022. A total of 130B bid requests were analyzed.
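The three columns of Figure 3 can be derived from per-request records. This sketch assumes each record holds the set of Tier 1 SDA categories and the set of ContextAI labels for one bid request with a compliant contextual SDA signal, and it uses the number of records as the Popularity denominator (an assumption; the denominator could instead be all received bid requests). The 60% threshold comes from the Match Rate definition above; the category and label names in the example are hypothetical.

```python
from collections import Counter, defaultdict


def summarize(requests, mapping, threshold=0.60, top_n=2):
    """Popularity, match rate, flag and typical correlation per SDA category.

    requests: list of (set of Tier 1 SDA categories, set of ContextAI labels).
    mapping: SDA category -> set of ContextAI labels counted as a match.
    """
    by_category = defaultdict(list)
    for categories, labels in requests:
        for category in categories:
            by_category[category].append(labels)

    rows = {}
    for category, label_sets in by_category.items():
        mapped = mapping.get(category)
        if mapped is None:
            continue  # no mappable ContextAI label: excluded from the analysis
        n = len(label_sets)
        rate = sum(bool(mapped & labels) for labels in label_sets) / n
        # ContextAI labels seen outside the agreed mapping ("Typical Correlation")
        outside = Counter(
            label for labels in label_sets for label in labels - mapped
        )
        rows[category] = {
            "popularity": n / len(requests),
            "match_rate": rate,
            "flag": "green" if rate > threshold else "red",
            "typical_correlation": [label for label, _ in outside.most_common(top_n)],
        }
    return rows


mapping = {"Sports": {"Sports"}, "Travel": {"Travel"}}  # hypothetical
requests = [
    ({"Sports"}, {"Sports", "News"}),
    ({"Sports"}, {"Sports"}),
    ({"Sports"}, {"News"}),
    ({"Travel"}, {"News"}),
    ({"Sensitive Topics"}, {"News"}),
]
for category, row in summarize(requests, mapping).items():
    print(category, row)
```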

Figure 3 shows that only 2 Seller-Defined Audiences categories had lower-than-expected match rates: “Family and Relationships” and “Hobbies & Interests”. When we analyzed the top URLs for the first, we found they were mostly about celebrities, which contradicts its taxonomy definition (celebrities should be covered by the “Pop Culture” segment). At the same time, no adequate ContextAI label exists to confirm “Family and Relationships”; the closest is the generic “People & Society”.

As for “Hobbies & Interests”, the most common ContextAI label was “News”. On investigation, the biggest contributor to this misalignment turned out to be the very generic URL https://www.msn.com/en-us, which serves news across all interests, industries, and regions. Our hypothesis is that “Hobbies & Interests” is a tricky topic to define, as many other segments, such as “Sports”, “Travel”, and “Pets”, might constitute someone’s hobby, and a “Hobbies & Leisure” tag sent alongside “Sports” doesn’t add much value.
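The URL drill-down described above can be sketched as ranking URLs by their share of one category’s mismatches. The record format, the `top_mismatch_urls` name, and the example.com URLs are hypothetical; a real analysis would likely also normalize URLs (for example, by stripping query strings) before counting.

```python
from collections import Counter


def top_mismatch_urls(records, top_n=3):
    """Rank URLs by their share of one SDA category's mismatches.

    records: (url, matched) pairs, where matched says whether the bid request's
    ContextAI labels matched the SDA category under the agreed mapping.
    """
    mismatches = Counter(url for url, matched in records if not matched)
    total = sum(mismatches.values())
    if total == 0:
        return []
    return [(url, count / total) for url, count in mismatches.most_common(top_n)]


records = [
    ("https://example.com/portal", False),
    ("https://example.com/portal", False),
    ("https://example.com/knitting", False),
    ("https://example.com/hiking", True),
]
for url, share in top_mismatch_urls(records):
    print(f"{url}: {share:.0%} of mismatches")
```

A single generic URL dominating this list is the pattern described above: the mismatch comes from one broad page rather than from the category as a whole.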

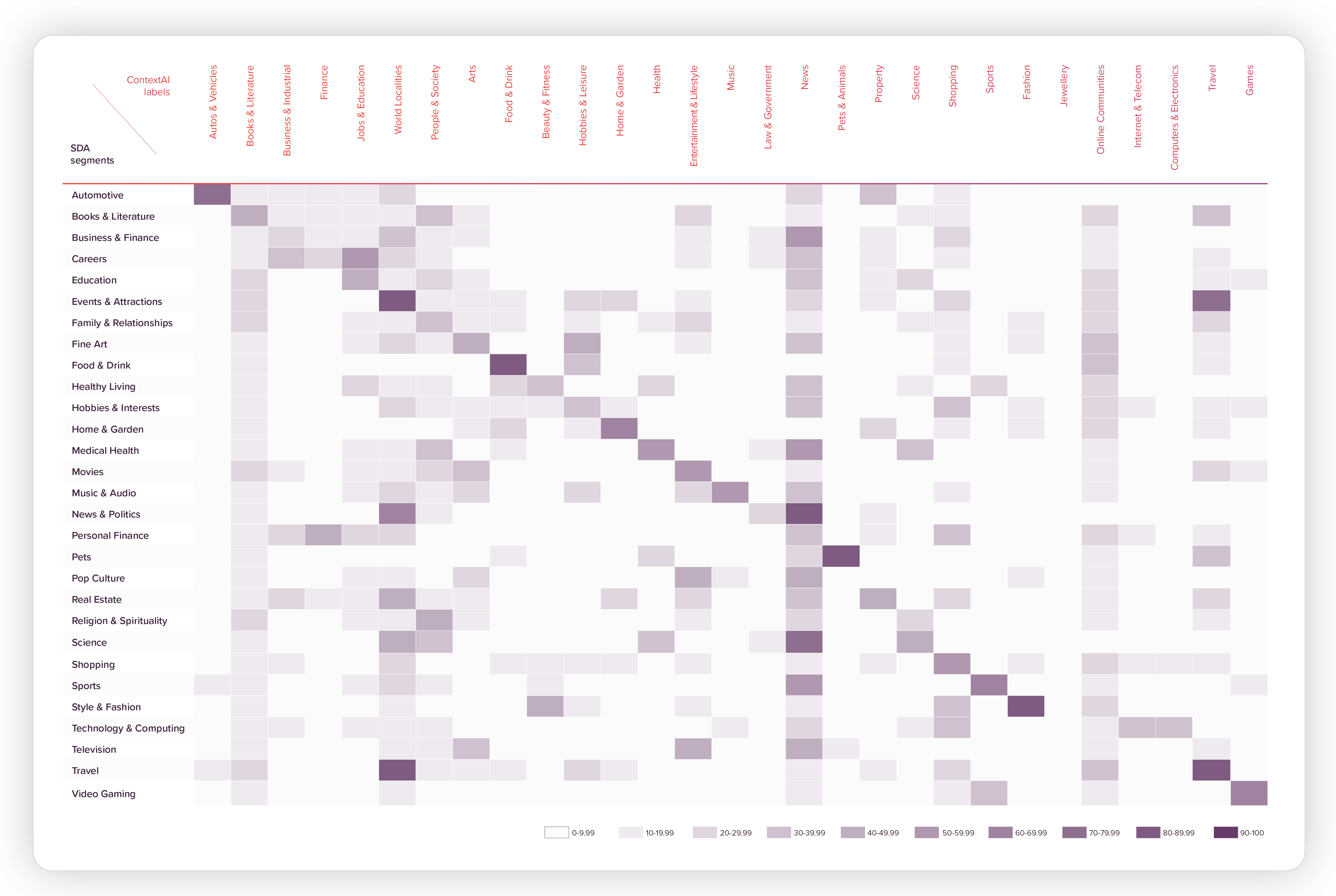

The following heatmap gives a full picture of how Tier 1 categories from the IAB Tech Lab Content Taxonomies aligned with our ContextAI labels. For each contextual SDA category, it shows the percentage of bid requests in which a specific ContextAI label was sent alongside it.

Figure 4
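Each cell of such a heatmap is a conditional percentage: among bid requests carrying a given SDA category, the share that also carried a given ContextAI label. Below is a minimal sketch with hypothetical label names; because one request can carry several labels, a row need not sum to 100%. The resulting nested dict could then be handed to any plotting library.

```python
from collections import Counter, defaultdict


def cooccurrence_heatmap(requests):
    """Percent of each SDA category's bid requests carrying each ContextAI label.

    requests: (set of Tier 1 SDA categories, set of ContextAI labels) per request.
    Returns {sda_category: {contextai_label: percent}}.
    """
    totals = Counter()
    pairs = defaultdict(Counter)
    for categories, labels in requests:
        for category in categories:
            totals[category] += 1
            pairs[category].update(labels)  # count each co-occurring label once
    return {
        category: {
            label: 100 * n / totals[category]
            for label, n in pairs[category].items()
        }
        for category in totals
    }


requests = [
    ({"Pets"}, {"Pets & Animals"}),
    ({"Pets"}, {"Pets & Animals", "Food & Drink"}),
    ({"Pets"}, {"News"}),
    ({"Pets"}, {"Pets & Animals"}),
    ({"Sports"}, {"Sports"}),
]
heatmap = cooccurrence_heatmap(requests)
print(heatmap["Pets"]["Pets & Animals"])  # 75.0
```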

What’s the verdict?

These 2 tests showed that compliant contextual SDA signals were, for the most part, of solid quality, with only a few exceptions. We conclude that contextual SDA passed both quality tests, a solid result, especially for this early stage of adoption.

If you have any questions, comments or issues, or you’re interested in meeting with us, please get in touch.