Last Updated on: 25th May 2024, 09:22 pm

Key takeaways from this article:

- User SDA passed both quality tests we performed and described in this article.

- The methods used to assess the quality of user SDA signals were 1) a manual Incognito mode verification of the mechanism assigning users to interest groups, and 2) a survey campaign to examine the relevancy of the targeted audiences.

Foreword

This article completes the round of quality tests performed on the sampled Seller-Defined Audiences signals we received from the sell side. While the previous article investigated contextual SDAs, this one focuses on user SDAs and verifies how well they reflect reality. The tests differ from the ones performed on the contextual signals because user signals are different in nature. The first test relied on a manual check of an assignment mechanism, while the second one was based on a survey campaign.

Table of Contents:

- Test 1: A verification of the mechanism assigning users to interest segments

- Test 2: A survey campaign test of the relevancy of users with assigned user SDAs

- What’s the verdict?

Test 1: A verification of the mechanism assigning users to interest segments

Assumption: The assignment of users to the right interest group is essential for the quality of any user SDA signal.

Background: After conducting an interview with a publisher regarding the logic it applies to assign users to interest segments, we learned that a user was claimed to be interested in topic “X” after visiting websites with “X” contextual SDA labels three times.

Hypothesis: We can trust the publisher’s declarations about the methodology of creating user SDAs.

Selected segment for the test: “X” (from the IAB Tech Lab Audience Taxonomy 1.1).

Approach:

- Opening the selected URL, where we expected to find user SDAs from that publisher, in Chrome’s Incognito mode with the “Block third-party cookies” setting turned off. The cookie consent selection (either “accept” or “reject”) and the VPN tool status (either “on” or “off”) made no difference to the procedure.

- Visiting the same URL three times and checking the user’s SDA status in the Developer Console.

Measure of success: Whether the assignment of the interest group matches the publisher’s claims (binary: “Yes” or “No”).

Exceptions: This manual test checks the assignment in an extremely controlled and narrow environment, so the results are specific to the selected publisher and URL.

Results: The test returned the expected “X” user SDA signal precisely after the third visit to the page.
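The rule verified in this test can be illustrated with a minimal sketch. It is only a model of what the publisher described in the interview (three visits to pages carrying the “X” contextual SDA label), not the publisher’s actual implementation. The class name `InterestTracker` and its methods are hypothetical.

```python
from collections import Counter

# From the publisher interview: three visits to pages labelled "X".
VISIT_THRESHOLD = 3


class InterestTracker:
    """Hypothetical model of the publisher's rule, not its real code."""

    def __init__(self, threshold: int = VISIT_THRESHOLD):
        self.threshold = threshold
        self.visits = Counter()

    def record_visit(self, contextual_labels):
        # Count one visit per distinct contextual SDA label on the page.
        self.visits.update(set(contextual_labels))

    def user_segments(self):
        # A label becomes a user SDA once its count reaches the threshold.
        return {label for label, n in self.visits.items() if n >= self.threshold}


tracker = InterestTracker()
history = []
for _ in range(3):
    tracker.record_visit(["X"])
    history.append("X" in tracker.user_segments())

print(history)  # [False, False, True]
```

Under this model the “X” user SDA appears only after the third visit, which is the behavior the manual check observed.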

Test 2: A survey campaign test of the relevancy of users with assigned user SDAs

Assumption: A majority of our surveys were answered truthfully.

Hypothesis: Users with a certain interest are more inclined to answer “Yes” to the questions asked through our surveys.

Selected segment for the test: “Cooking” (ID 371 from the IAB Tech Lab Audience Taxonomy 1.1), which is a subset of “Food & Drink” (ID 368 from the same taxonomy).

Approach:

- Creating questions that test users’ interest in “Cooking”.

- Compiling a list of domains rich with user SDA signals.

- Setting up an A/B test with users carrying the “Cooking” user SDA as the test group and all users, regardless of their user SDA type, as the control group. Some users had no Seller-Defined Audiences signals at all, and including them gave an alternative form of the control group.

- Performing a campaign that would display the created questions to the users.

- Recording the responses and calculating the “Yes” rate (number of “Yes” responses divided by the total number of responses).

Measure of success: Gathering around 500-1000 answers and recording a relative difference of at least 10% between the “Yes” rate of the test group and that of the control group.
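As a worked example of this success criterion, the sketch below computes the “Yes” rate and the relative difference. It assumes the relative difference is measured against the control group, i.e. (test rate − control rate) / control rate. The function names and the response counts are hypothetical and are not the campaign’s data.

```python
MIN_RELATIVE_DELTA = 0.10  # success threshold stated in the article


def yes_rate(yes: int, total: int) -> float:
    """Number of "Yes" responses divided by the total number of responses."""
    if total <= 0:
        raise ValueError("total must be positive")
    return yes / total


def relative_delta(test_rate: float, control_rate: float) -> float:
    """Relative difference of the test rate against the control rate (assumed definition)."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (test_rate - control_rate) / control_rate


# Hypothetical counts for illustration only.
test_rate = yes_rate(yes=30, total=100)     # 0.30
control_rate = yes_rate(yes=50, total=200)  # 0.25
delta = relative_delta(test_rate, control_rate)
passed = delta >= MIN_RELATIVE_DELTA

print(f"relative delta: {delta:.0%}, passed: {passed}")  # relative delta: 20%, passed: True
```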

Exceptions: The nature of the test didn’t allow for an equal sampling between the test and the control groups, which might have affected the result. Moreover, the scope of the test was limited to only a few domains.

Timing: The test was spread over a few iterations between Dec 2022 and Apr 2023.

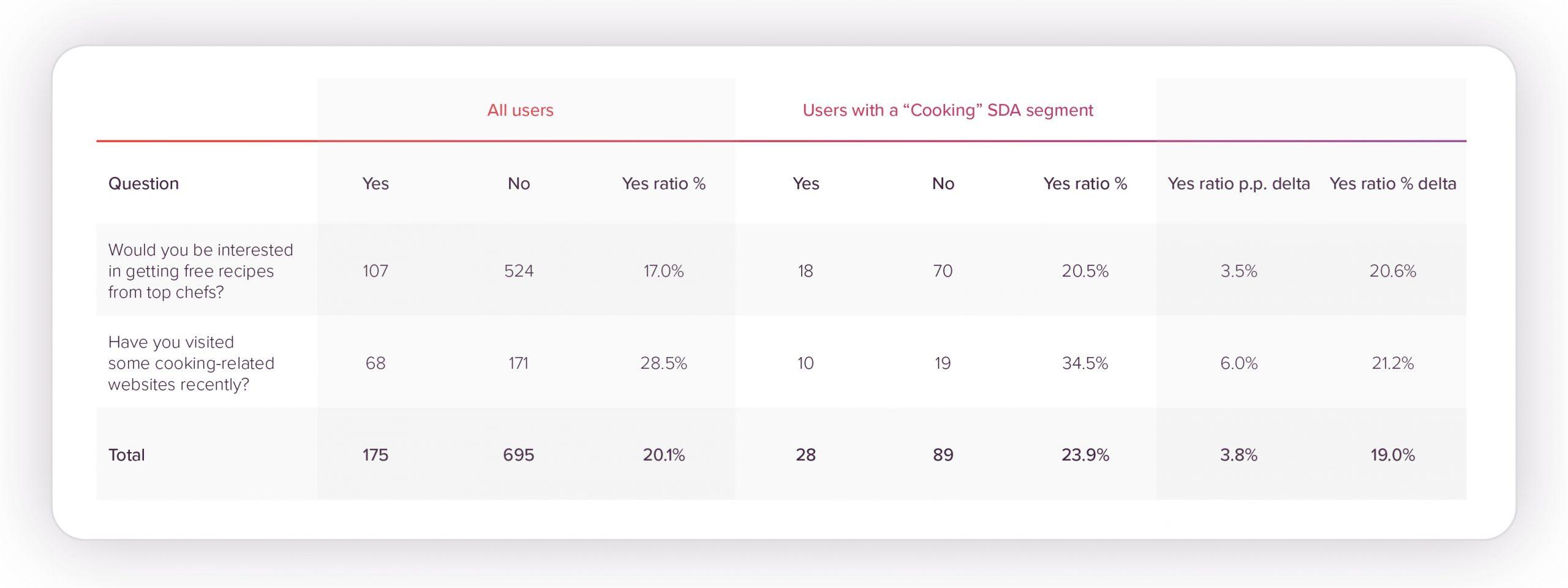

Test: Two questions were presented to the users through the surveys:

- Would you be interested in getting free recipes from top chefs?

- Have you visited some cooking-related websites recently?

The test also varied in how the control group was defined. We applied two approaches:

- All bid requests with any SDA from the investigated domains.

- All bid requests from the investigated domains (with and without SDA).
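The test group and the two control group definitions above can be sketched as a simple classification of bid requests. This is a minimal illustration under several assumptions: user SDAs arrive in an OpenRTB-style `user.data` array, `ext.segtax` value 4 denotes the IAB Tech Lab Audience Taxonomy 1.1, and “any SDA” means any user SDA segment. The helper names, group names, and sample requests are hypothetical.

```python
COOKING_SEGMENT_ID = "371"  # "Cooking" in the IAB Tech Lab Audience Taxonomy 1.1
AUDIENCE_TAXONOMY_1_1 = 4   # assumed segtax value for Audience Taxonomy 1.1


def user_segment_ids(bid_request: dict) -> set:
    """Collect user SDA segment IDs from an OpenRTB-style bid request dict."""
    ids = set()
    for data in bid_request.get("user", {}).get("data", []):
        if data.get("ext", {}).get("segtax") != AUDIENCE_TAXONOMY_1_1:
            continue  # skip data objects from other taxonomies
        for segment in data.get("segment", []):
            if "id" in segment:
                ids.add(str(segment["id"]))
    return ids


def groups_for(bid_request: dict) -> set:
    """Return every group a bid request belongs to (groups overlap by design)."""
    ids = user_segment_ids(bid_request)
    groups = {"control_all"}           # all bid requests, with and without SDA
    if ids:
        groups.add("control_any_sda")  # bid requests with any user SDA
    if COOKING_SEGMENT_ID in ids:
        groups.add("test")             # bid requests with the "Cooking" user SDA
    return groups


# Hypothetical sample requests; "999" is a placeholder segment ID.
cooking = {"user": {"data": [{"ext": {"segtax": 4}, "segment": [{"id": "371"}]}]}}
other = {"user": {"data": [{"ext": {"segtax": 4}, "segment": [{"id": "999"}]}]}}
no_sda = {"user": {}}

for name, request in [("cooking", cooking), ("other", other), ("no_sda", no_sda)]:
    print(name, sorted(groups_for(request)))
```

Because the control groups include the test users, the groups overlap, which matches the description of the control group as all users regardless of their user SDA type.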

We shortlisted domains to run the survey campaign and landed on eight addresses. The criteria were as follows:

- A dense presence of user SDAs.

- A domain’s main theme was largely unassociated with cooking or even “Food & Drink” as a whole (to rule out the contextual factor from the analysis).

Figure 1

Figure 2

We collected 870 responses. Figure 1 and Figure 2 show that while individual questions performed differently (in one instance, heavily in favor of “Would you be interested in getting free recipes from top chefs?”), the total result of the test was very similar across both applied control groups. Most importantly, the relative delta of the “Yes” ratio between the test and control groups was around 19%, almost twice as large as our minimum requirement for the test to be successful.

What’s the verdict?

The two tests that we conducted checked two different things:

- If the theoretical mechanisms of assigning users to interest groups hold true in practice (a minor manual test).

- If the captured audience with the selected Seller-Defined Audiences segment is more relevant than the one without.

Both user SDA tests were positive, which adds to the successful results of the contextual SDA tests described in Part II of our Seller-Defined Audiences Analysis Series, and concludes the quality tests of SDA signals.

This exercise confirms the vast potential of Seller-Defined Audiences. However, that potential can only be realized when adoption grows and the compliance of Seller-Defined Audiences signals is thoroughly looked after: the compliance rate of user SDAs hovers around just 25% (almost 3x lower than the compliance rate for contextual SDAs). Moreover, it’s essential to keep in mind that leveraging user SDAs will only be privacy-preserving if they’re not passed along with other user-specific signals, such as external cross-site IDs, 1st-party IDs, 3rd-party cookies, and others. This currently doesn’t hold true for the vast majority of user SDAs.

These pain points were highlighted in Part I of the Seller-Defined Audiences Analysis Series. One should also keep in mind that the test included eight domains with a relatively good quantity and quality of user SDA signals. This should not be taken for granted, as many websites still lack any SDAs whatsoever. And even if Seller-Defined Audiences signals are present, their quality may vary radically depending on the publisher and the mechanism used for their creation.

Therefore, it is good practice to validate how user SDA signals were created in the first place to avoid using unreliable technologies. Nutrition labels (AKA the Data Transparency Standard mentioned in Part I of the Seller-Defined Audiences Analysis Series) should assist in achieving this.

If you have any questions, comments or issues, or you’re interested in meeting with us, please get in touch.